How realistic is it that a script you didn’t even write could dramatically slow down your site, and other major sites as well? Keep reading: scripts can slow down sites, and it hurts to watch!

I watched Steve Souders’ 2012 Fluent talk on High Performance Snippets (a must-watch for all SPOF fans) and got inspired to test how an “innocent” 3rd party script (btw., I call them 3rd party monsters) that is not loaded properly can become a single point of failure (SPOF) and make a site very slooooooow to load.

Developers are always proud and optimistic about their code. When it comes to including 3rd party scripts, code they don’t usually touch, they assume that providers like Google never go down, that a non-responsive ad server like DoubleClick won’t hurt, or that Twitter won’t have server failures. 3rd party script developers try their best to make it easy and painless for us to include their high-performing scripts in our sites. That’s true, but only up to a point: they can only do so much. If you don’t include the script properly on your end and their service goes down, their high-performing code won’t be able to help you at all. The rule of thumb is to include those scripts asynchronously. That way your content won’t be blocked from rendering if the 3rd party service is down.
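As a minimal sketch of that rule of thumb, the helper below injects a script element with `async` set instead of relying on a blocking script tag in the markup. The name `loadScriptAsync` and the example URL are assumptions made for illustration, not any provider’s official snippet. The `doc` parameter is passed in so the function can also be exercised outside a browser.

```javascript
// Hypothetical helper: add a 3rd party script without blocking rendering.
// `doc` is expected to be the browser's `document`, or a stand-in that offers
// createElement, getElementsByTagName and parentNode.insertBefore.
function loadScriptAsync(doc, src) {
  var script = doc.createElement('script');
  script.src = src;
  script.async = true; // let HTML parsing continue while this downloads

  // Insert before the first script tag on the page. One always exists
  // when this code itself runs from a script tag.
  var first = doc.getElementsByTagName('script')[0];
  first.parentNode.insertBefore(script, first);
  return script;
}
```

In a page this would be called as `loadScriptAsync(document, 'https://thirdparty.example/widget.js')`, where the URL is a placeholder. If that host never answers, the rest of the page can still render.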

However, scripts that use document.write can’t be loaded asynchronously (unfortunately). Read more about this in the great Krux post and some of Steve Souders’ posts.

To me it’s the elephant in the room: you pray that, say, Twitter doesn’t go down, yet you are too afraid to test it, or over-confident that it won’t break your page, or you simply don’t know how you would test this scenario in the first place. Am I right? Well, what if you could run a quick test on a web site and pretend all of its 3rd party scripts and providers were down? Let’s play the “3rd party scripts game”: would your web site still render? How confident are you?

Simulating SPOF – Slow down your own site until it really hurts

Are you ready for this? First, edit your hosts file to point to a blackhole IP address for simulation (I used the blackhole IP address Steve shared in his talk on slide 9).

sudo vi /etc/hosts

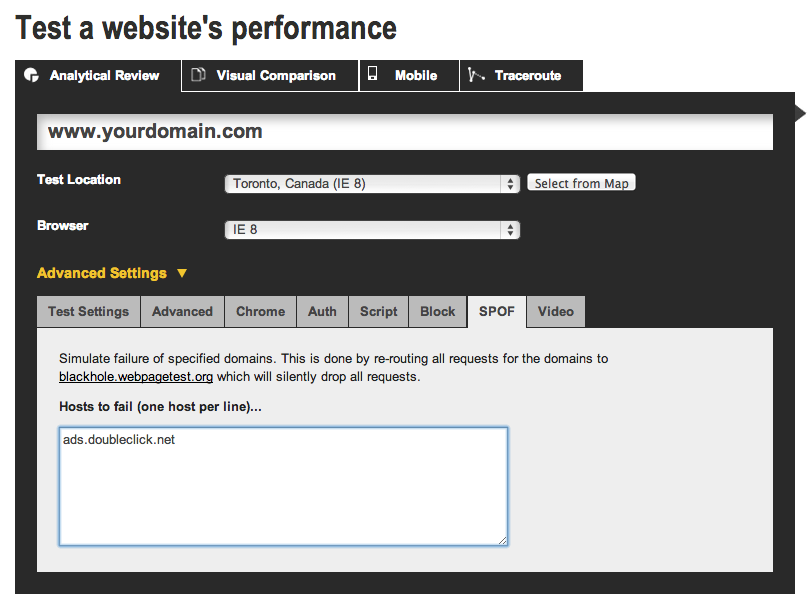

While setting up my test, I don’t want to play the really bad gal (yet) and assume all 3rd party providers are down. I’d like to start with the simplest yet most widely used and potentially harmful domain, ads.doubleclick.net. A lot of web sites include ads and use DoubleClick.

So let’s use this domain for our blackhole test. By all means, you can add more 3rd party scripts to your hosts file.

# add this line to your hosts file

72.66.115.13 ads.doubleclick.net
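If you want to blackhole several providers at once, the hosts lines can be generated rather than typed by hand. This is only a small sketch: the helper name `blackholeEntries` is made up, and the second domain is an assumed example of another 3rd party host, not one tested in this post.

```javascript
// Build hosts-file lines that point each domain at the blackhole IP.
function blackholeEntries(ip, domains) {
  return domains.map(function (domain) {
    return ip + ' ' + domain;
  }).join('\n');
}

// The IP is the one used above; the second domain is only an illustration.
console.log(blackholeEntries('72.66.115.13', [
  'ads.doubleclick.net',
  'platform.twitter.com'
]));
```

The printed lines can then be pasted into /etc/hosts.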

Once you’ve updated your hosts file, remember to flush your DNS cache (the command below is for OS X).

dscacheutil -flushcache

Now, open your browser (with the cache disabled so your browser is not using any DoubleClick scripts from the cache). Type in your site’s URL and be prepared for the worst. How long will it take for the website to load?

That’s a very easy (scary) and quick way of evaluating what is on your critical rendering path and obviously (now) what should not be on it anymore!
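To put numbers on what held the page up, one option that goes beyond my original test is to sort the browser’s Resource Timing entries by duration once the page has finally loaded. The sketch below assumes entries shaped like those returned by `performance.getEntriesByType('resource')`, that is, objects with a `name` (the URL) and a `duration` in milliseconds. The helper name `slowestResources` is hypothetical. Whether a timed-out request appears in that list at all can vary by browser.

```javascript
// Return the `count` slowest entries, longest duration first.
function slowestResources(entries, count) {
  return entries
    .slice() // copy so the caller's array is not reordered
    .sort(function (a, b) { return b.duration - a.duration; })
    .slice(0, count)
    .map(function (entry) {
      return { url: entry.name, ms: Math.round(entry.duration) };
    });
}
```

In the browser console you could call `slowestResources(performance.getEntriesByType('resource'), 5)` and look for 3rd party hosts near the top.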

I ran this test on our site and let me tell you, it hurt. Period. It took almost 1.5 min for cbc.ca to display useful content faking that DoubleClick was down. The browser finally gave up.

I wasn’t ready to stop the game. I wondered whether it’s just our site that doesn’t properly handle the outage of a single domain such as ads.doubleclick.net. So I continued, tried the following random websites, and measured the time it took to see useful content on each.

| URL | Time elapsed before useful content appeared |
| --- | --- |
| www.people.com | ~4.5 mins |
| www.bbc.com | ~2.5 mins |
| www.amazon.com | Fine, didn’t seem to use DoubleClick |
| www.cnn.com | Fine, they seemed to be doing the proper handling |
| www.facebook.com | Surprise, surprise: Facebook doesn’t use DoubleClick. They use their own, so no real delay here. |

If you don’t want to edit your hosts file and want more concrete waterfall and timing information as well as video capture, try what Steve Souders suggested in his Fluent talk: use the scripts (now SPOF) box at webpagetest.org to include DNS changes. The results give you great detail on how the website performed with and without SPOF.

Note: I’ve tried the WebPagetest SPOF option myself and didn’t notice a big difference between the non-SPOF and SPOF versions; my suspicion is that WebPagetest might not be using an empty cache for the SPOF setting. The tests I ran manually on my local machine showed a more visible negative impact of the SPOFs (I shall confirm this).

3rd party scripts are everywhere

It was verified last month that 18% of the world’s top 300K URLs load jQuery from Google Hosted Libraries. In theory, that means that if that service goes down and a web site uses jQuery from ajax.googleapis.com (and doesn’t have a fallback), the site might not work at all. Isn’t that scary? If you develop for a web site that already uses a CDN, don’t use Google’s CDN for scripts like jQuery. Avoid those 3rd party dependencies as much as possible.
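For sites that do keep the Google-hosted copy, a fallback could look roughly like the sketch below: after the CDN script tag, check whether `window.jQuery` exists and, if not, inject a self-hosted copy. The function name `ensureJQuery` and the local path are assumptions for illustration. Note that this only covers the “might not work at all” case. If the CDN tag is a blocking one, the browser still has to give up on that request before this check can run.

```javascript
// Hypothetical fallback: load a self-hosted jQuery when the CDN copy is missing.
// `win` and `doc` are the browser's `window` and `document` (or stand-ins).
function ensureJQuery(win, doc, localSrc) {
  if (win.jQuery) {
    return false; // the CDN copy loaded, nothing to do
  }
  var script = doc.createElement('script');
  script.src = localSrc; // assumed path to your own copy
  doc.getElementsByTagName('head')[0].appendChild(script);
  return true; // the fallback was injected
}
```

A call such as `ensureJQuery(window, document, '/js/jquery.min.js')` would sit right after the CDN script tag. Because the injected copy loads asynchronously, code that depends on jQuery has to wait for it, for example via the script element’s `onload`.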

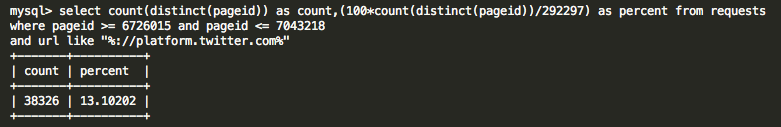

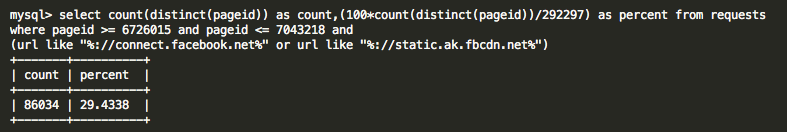

I ran two queries on my local HTTP Archive database (dump from March 2013) and followed the same filter that Steve Souders used above. I restricted the query to the 292,297 distinct URLs from the March 1, 2013 crawl (with their respective unique pageids). I wanted to see how many of the top 300K URLs use Twitter widgets and any sort of Facebook scripts (without distinguishing whether they were loaded synchronously or asynchronously).

13% of the Top 300K URLs include Twitter scripts somewhere on their page.

29% of the Top 300K URLs include Facebook scripts somewhere on their page.

Feel free to extend this exercise to include more 3rd party domains.

Cached 3rd party scripts

You can’t really rely on the cache settings of your 3rd party scripts to shield you from an outage, even one that lasts less than a few hours. 3rd party providers tend to set a very short cache time on their scripts so that they can change the file frequently.

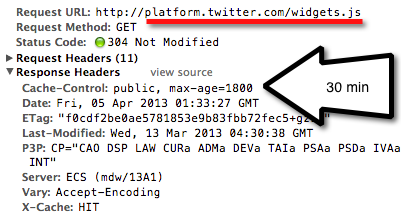

That setup plays against you if you don’t load 3rd party scripts asynchronously. For example, Twitter’s widget.js has a cache time of (only) 30 minutes. I wonder what change could be so important for Twitter that it can’t wait more than 30 minutes to reach the sites consuming this widget.js file.

So imagine the following: at 9 AM you go to a site with the Twitter widget loaded synchronously at the top of the page (bad!) and get the latest, freshest version of widget.js. Twitter goes down at 9:10 AM. You go back to the same site at 9:15 AM and everything is still fine; you won’t see any problems because you are getting the Twitter widget script from the browser cache. But what if Twitter is still down at 9:40 AM and you visit the same page again? You are now past the cache expiry time, so your browser requests a new version of the Twitter script and tries to reach the Twitter server that is still down. The request for the Twitter script times out and (with the setup described above) blocks the page content from rendering in the meantime. Bottom line: you won’t see any content until the request times out or Twitter is back up. It’s easy to check those cache times yourself, e.g. use Chrome dev tools and check the response headers of those 3rd party scripts.
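The 9:00 / 9:15 / 9:40 timeline can be replayed with a few lines of code. This is a simplified sketch of freshness based only on `max-age`. It ignores `Expires`, the `Age` header, and revalidation, and the header value used is an assumed example of a 30 minute cache time rather than a copy of Twitter’s actual response. The function names are made up for illustration.

```javascript
// Extract max-age (in seconds) from a Cache-Control header value.
// Returns null when no max-age directive is present.
function parseMaxAge(cacheControl) {
  var match = /(?:^|[\s,])max-age=(\d+)/i.exec(cacheControl || '');
  return match ? parseInt(match[1], 10) : null;
}

// True if a copy fetched at `fetchedAtMs` is still fresh at `nowMs`.
function isFresh(fetchedAtMs, maxAgeSeconds, nowMs) {
  return (nowMs - fetchedAtMs) < maxAgeSeconds * 1000;
}

var MINUTE = 60 * 1000;
var fetchedAt = 9 * 60 * MINUTE;                  // 9:00 AM as ms since midnight
var maxAge = parseMaxAge('public, max-age=1800'); // assumed header: 1800 s = 30 min

// 9:15 AM: still within 30 minutes, so the cached script is used.
console.log(isFresh(fetchedAt, maxAge, fetchedAt + 15 * MINUTE));
// 9:40 AM: past 30 minutes, so the browser has to go to the network.
console.log(isFresh(fetchedAt, maxAge, fetchedAt + 40 * MINUTE));
```

The first call reports a fresh copy and the second a stale one. At that second point a synchronously loaded script starts waiting on the provider that is down.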

The screenshot below shows Twitter and Facebook’s cache-control settings:

Conclusion

In order to really focus on your site’s performance, you need to isolate the (potentially bad) performance of 3rd party monsters (the ones that you decided to invite to your site). Don’t make your users wait for your own content if a 3rd party provider is down.

References

- The slides to Steve Souders’ excellent talk can be found here and here

- Pat Meenan wrote a great article a little while ago about this as well

- Blackhole Server
